Increasing pedestrian safety through smart tones

The advent of Electric Vehicles has brought a myriad of benefits to the consumers that drive them, the cities that host them and the environment. Pedestrians seem to be the only ones caught in the crossroads, as the lack of an engine sound in these vehicles has proven to be an ever-growing risk for the people around them.

With new regulations set to kick in in 2019, it's time for the auto and technology industries to seize the opportunity to put pedestrian safety first via a smarter, more meaningful use of sound, rather than defaulting to replicating a motor or the already out-of-date combustion engine sound.

By using increasingly available data from sensors, as well as shifting the focus to pedestrians' needs, a team at ustwo New York has explored different auditory concepts to communicate risk to pedestrians, with the goal of creating a safer relationship between them and the cars of the future.

An Introduction: The Pros and Cons of EVs

Electric Vehicles (EVs) are increasingly becoming a common sight on roads and in cities around the world. EV sales grew by 41% globally in 2016, and their prominence on roads is projected to grow exponentially in the following decades. The benefits these vehicles are bringing to society are many, for example the reduction in CO2 emissions and better fuel economy; but there’s one important issue on which the spotlight has been growing for quite some time: EVs lack discernible sound when they’re operational due to the absence of a combustion engine.

Although this might be a positive aspect of EVs for many (in the form of less noise pollution), it has become an increasingly dangerous factor for pedestrians, especially cyclists and visually impaired persons. A 2011 study by the National Highway Traffic Safety Administration (NHTSA) shows that hybrid and EVs are 37% more likely to cause accidents involving pedestrians, and 57% more likely to cause accidents involving cyclists.

This leaves us with the problem we face today: With a lack of engine sound, pedestrians are having an increasingly difficult time knowing when electric vehicles are near, which results in an inability to recognize and prevent dangerous situations.

In order to address this issue, several countries have passed regulations and guidelines which mandate that all EVs must emit a form of sound when driving at slower speeds (this is not an issue at higher speeds, as the sound of wind and tires is present). In the United States, the NHTSA recently passed a rule that brings the same sound requirements to “all hybrid and electric light vehicles with four wheels...traveling in reverse or forward at speeds up to 30 kilometers per hour (about 19 miles per hour)”.

Auto manufacturers are already hard at work adding digital sounds that replicate an engine noise, usually through the use of external speakers, in order to comply with this rule when it becomes active in 2019. This approach uses the well-established idea that the revving sounds of an engine communicate danger, and car OEMs (Original Equipment Manufacturers) are taking advantage of this to increase the safety proposition for EVs and, in some cases, keep a familiar “thrill” that speaks to their brand style and proposition.

Considering that this law was passed to directly address and improve pedestrian safety, we asked ourselves: is this the best approach to solving this issue? The increasing use of technology inside and outside the car is quickly opening up possibilities and features never seen before in vehicles, such as autonomous driving and collision avoidance capabilities. These new systems are taking advantage of sensory data to make better, more informed decisions when it comes to safety.

At ustwo, we’re driven by constant exploration and experimentation to address current issues that have a meaningful impact on people’s lives. Our user-centered, research-backed focus, as well as our deep passion to build, test, and validate our ideas, serve as a great platform to explore a different approach for the future of external vehicular sounds.

Realizing the potential a smarter use of sound has to dramatically improve pedestrian safety, accentuated by a collaboration with music and sound experts Man Made Music on the possibilities of the medium, has led us to an exciting challenge that our team is all too eager to tackle head-on. Simply put, we believe there is an opportunity to rethink the role of electric vehicle sounds when it comes to car/pedestrian interactions.

In this post, we aim to look at current solutions being implemented by OEMs, discuss our perspective on what the future could look like for external vehicle sound, walk you through the possibilities we explored, and share our experiments and observations from the project.

The Current Landscape

In order for us to look at the opportunities of using new types of external vehicular sounds to improve pedestrian safety, we believe it's important to look at how auto manufacturers are currently tackling this issue for EVs.

Auto OEMs have been working on this problem since the late 2000s, just before the first commercial wave of EVs launched and as initial concerns about pedestrian safety were starting to appear. One of the first examples of a solution was the Nissan Leaf’s VSP System. This model came with two distinct sounds: an “electric motor” type of sound for when the car is accelerating forwards, and an “intermittent”, beeping type of sound for reversing.

Since then, car manufacturers like Renault and Fisker Automotive (now Karma Automotive) have followed suit by creating external sounds which attempt to replicate the sound of an engine or motor. Using this approach as a starting point, OEMs are now “designing” these emitted sounds to be a representation of their brand, as we can see Audi doing in this video:

Embedded content: https://www.youtube.com/watch?v=4IumQkKXdBs

Since most car manufacturers are choosing this particular route as the preferred solution to address pedestrian-related safety concerns, let’s take a closer look at engine-like sounds and how they’re used.

Internal combustion engines have been used to power vehicles for more than a century. This has resulted in car-related engine noises becoming deeply ingrained in modern culture and people’s everyday lives, and serves as the primary benefit of keeping them for future vehicles: They’re already familiar to pedestrians. With engine sounds, there’s nothing new to learn; the approaching, ever-increasing sound of an engine communicates that a vehicle is getting closer to one’s location. This has become the clearest representation of danger we can get from a moving vehicle, and one of the primary ways pedestrians gauge their level of safety in relation to it. Currently, OEMs are using this well-established convention as one of the reasons to carry over engine-like sound to modern EVs.

When it comes to alerting pedestrians about vehicle-centric danger, another common sound source is the horn. Horn sounds usually serve as a clear, straightforward method to communicate potential danger to unsuspecting pedestrians nearby. Along with engine sounds, they’re also a familiar staple in modern city soundscapes.

Although engine and horn noises are the most common ways a vehicle can communicate safety-related messaging through sound to pedestrians, we start running into issues if we want to have a deeper understanding of the situation and the level of risk a pedestrian is actually in. Engine noises help us, at best, to estimate where the car is in relation to us and how fast it is moving, while a horn lacks both a clear message (Do I get out of the way or do I stop moving?) and a clear recipient (Are you honking at me or someone else?). The truth is, in order to enhance pedestrian safety, both methods could greatly benefit from the additional information that cars are starting to gather (such as pedestrian and vehicle recognition, projected paths, road conditions, amongst others) as a result of the auto industry’s focus on autonomous driving. Additional issues arise when we take into consideration the large amounts of noise pollution caused by the myriad of cars driving in and around cities.

Finally, we come to the ever-increasing reality that combustion engines are slowly becoming dated technology. Is replicating the sounds of the past the right way to go, or should we be innovating? Kevin Harper, our VR Engineer on the project, put it like this:

"Recreating and adding a combustion engine sound to electric vehicles is like adding a horse's neigh to the first cars ever made!"

Although a bit hyperbolic, this statement contains some interesting points. Engine sounds are a result of the internal mechanics of combustion engines. If current vehicles (and the vehicles of the future) are starting to be powered by clean energy and ditching combustion engines altogether, should we be using a soon-to-be-legacy sound as the ideal solution to this problem?

At ustwo, we believe that this technological juncture, where EVs and autonomous cars are increasingly gaining ground in the mainstream, is the perfect opportunity to question these ways of thought and look at this problem from a different perspective:

- What are internal combustion engine sounds actually trying to communicate in a vehicle/pedestrian context?

- What information is actually useful for people to receive from vehicles around them when walking or cycling through the streets?

- With new vehicle technology becoming increasingly available, is an engine-like type of sound the best approach to communicate risk?

- Is there a more modern, user-centric approach we can explore?

Developing A Perspective: Initial Research

As with any new automotive-related project we delve into, we started by conducting initial research that would guide us in understanding all aspects that are relevant to vehicle sounds. This challenge in particular spreads across many different intersecting fields. Among those, we can find:

- Automotive branding: all vehicle-emitted sounds are carefully considered to embody an OEM’s brand characteristics and legacy. There is a major opportunity for safety-related sounds to follow suit.

- Urban soundscapes and population well-being: adding (or removing) any sound to an urban soundscape has the potential to impact cities in both positive and negative ways. Modifying an established soundscape should aim to reduce noise pollution, support human health and create better cities for their inhabitants.

- Sound Design: sounds can be an effective way to communicate information to their recipients. New external vehicular sounds have an opportunity to communicate more to people around them.

Any new concepts or ideas for vehicle-related sounds will both draw insights from, and impact, all of the fields mentioned above.

In addition to constant research, we involved field experts to add a grounded perspective throughout the process, as well as to gather input to our thinking and initial ideas. One of these experts was Myounghoon "Philart" Jeon, Professor at the Department of Cognitive and Learning Sciences and Department of Computer Science at Michigan Tech University. Professor Jeon is also Director at Mind Music Lab, a transdisciplinary research group within the university.

We spoke with Professor Jeon during the initial weeks of the project, and he shared his belief that safety has priority over the brand side when designing external vehicle sounds. Moreover, he proposed that there is an opportunity for these sounds to “provide some information on what the car is doing.” During our interview, he elaborated on his perspective:

"When we design [automotive] sound, we need to balance between traditional safety and usability vs aesthetics and brand language. For this case specifically, safety may have priority over the other aspects, because [vehicle vs human] is a really critical point."

We also spoke with Paul Jennings, Professor in WMG, University of Warwick, and asked about his thoughts on the current solutions implemented by car manufacturers. Speaking about the impact that keeping engine-like sounds would have on cities, he mentioned that the opportunity to reduce noise pollution is an important one to consider:

"When you think about it from a soundscape perspective, [it would mean] that the future soundscape would be identical to the current one. That seems to be a big disappointment, because the chance to… reduce the adverse effect of traffic noise seems to be a great one."

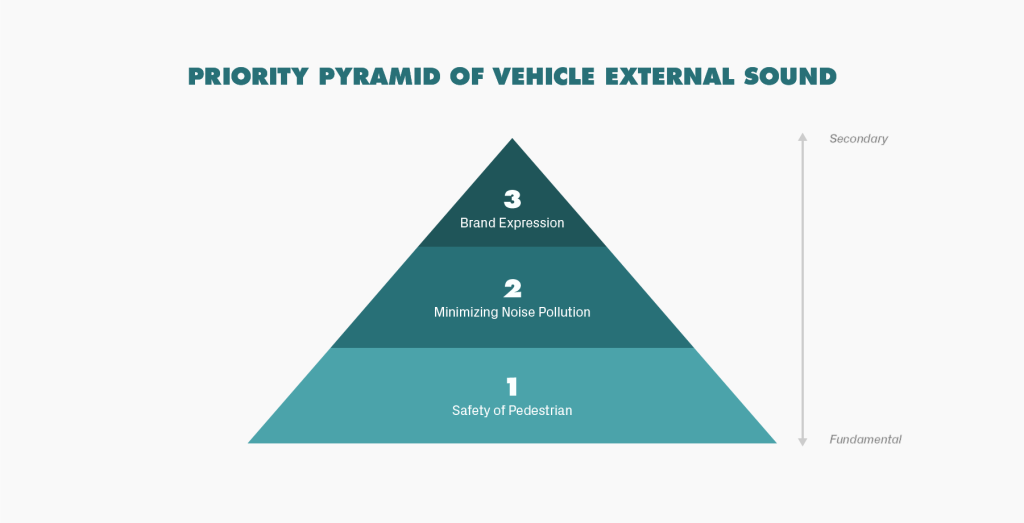

Using what we learned over our initial round of research, as well as the input from our expert interviews, we created a principles pyramid that covers the 3 key aspects we identified to be important to consider during this project:

Even though all three aspects are of high importance, our research led us to prioritize them based on current impact on the population’s needs. More specifically:

- Safety of Pedestrians/Drivers: Safety is the fundamental aspect external vehicle sounds should focus on. Any type of audio system should take advantage of all sensory data available to them in order to decrease any potential risks.

- Minimizing Sound Pollution: With the advent of EVs, we believe we are at a crossroads where we can shape future city soundscapes to become more pleasant and less cluttered with traffic-related noise. Considering how noise pollution impacts human health, we believe this is an important aspect to consider.

- Brand Expression: Even though we’re big fans of using sound design as an important way for car manufacturers to create stronger and more exciting relationships with their customers, we believe that external vehicular sounds should support the wellbeing of people and the environment, first and foremost.

In addition to these key aspects, we believe that taking advantage of as much data and information as we can gather from the cameras and sensors included in vehicles is a vital opportunity that’s not to be overlooked. This is particularly important, as the number of sensors being added to cars is constantly increasing. Professor Jennings weighs in on this topic, stating that since “the capability of sensing technology is improving so quickly, it might be more sensible to consider using it to help assess risk. That risk is important.”

While we were developing our initial perspectives on this topic, we knew that understanding the role of sound within this issue was paramount. We reached out to Man Made Music to give us their thoughts on the opportunities and challenges of sound within the pedestrian/vehicle context.

“It’s important that we build on what’s intuitive”, said Joel Beckerman, Founder of the New York City-based strategic music and sound studio. “As human beings, we are wired for sound, and we count on it in our daily lives to help us learn, navigate experiences, and connect with the world. I refer to this as Sonic Humanism.”

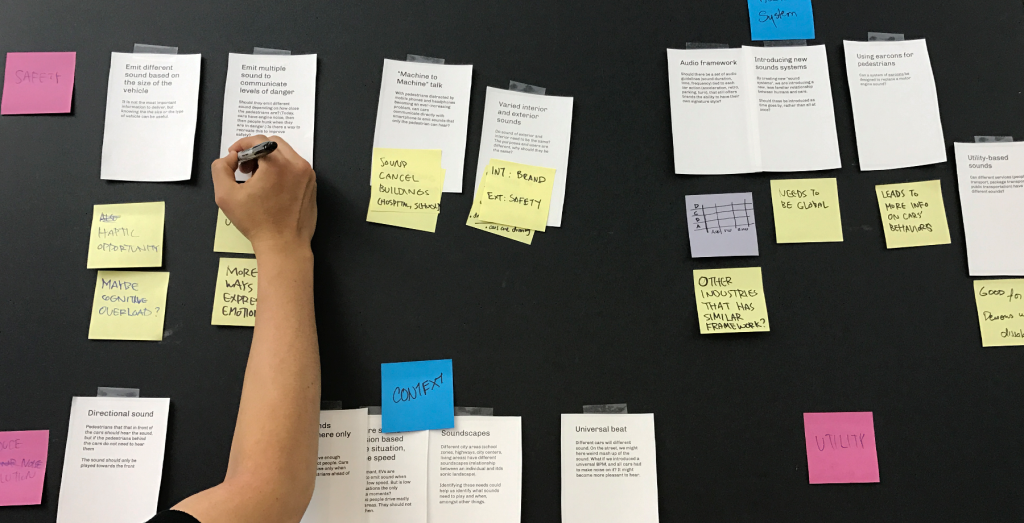

As we started diving deeper and gathering more knowledge, dozens of exciting opportunities and initial thoughts began surfacing within the team. Following ustwo’s mantra of getting our hands dirty as soon as we can, the team got together and started discussing some of these thoughts, turning them into a cohesive group of ideas.

These initial concepts would serve as a starting point to identify interesting possibilities, while we continued to develop, strengthen or discard them via additional research and iterative work.

Exploring The Possibilities

Thinking about the future of vehicular sounds is no small task. Exploring a topic like this can be as broad and daunting as it can be exciting. After sorting through our initial ideas, which took us from technical frameworks to utopian scenarios where technology is always in sync, we realized that the possibilities to reach for the future and the opportunities to innovate are many. The team identified similar characteristics within our ideas and proceeded to group them based on three key themes: Context, Safety, and Audio Systems.

- Context: Contextual empathy can ensure that the right sounds are played at the right times. The team envisioned different ideas where vehicles are constantly aware of their surroundings and what their role is within the soundscape (or different soundscapes) of a city.

- Safety: This group of ideas uses different sounds and alerts to communicate more information to pedestrians about their safety in a variety of ways.

- Audio Systems: All ideas that relate to the actual sounds that would be emitted from the vehicle, including audio “guidelines” and implementation strategy.

These three themes are fairly interesting in their own right and, unsurprisingly enough, surfaced many different and exciting routes to explore. In order to find our focus, we reminded ourselves of our initial goal: To explore a potential solution that would directly address the ruling passed by the NHTSA. We went back to our initial brief and, in good ustwo fashion, we asked ourselves: Which of these initial ideas could have the most impact on increasing pedestrian safety? This question helped us categorize our ideas with a more focused perspective, which led us to arrive at the following breakdown:

Using the diagram shown above, we prioritized our ideas by using pedestrian safety as the primary focus, as this is the goal of the ruling, and a reduction of noise pollution as the secondary focus, as we believe this ruling is an opportunity to improve the urban soundscape, thus increasing people’s wellbeing.

In addition, we simplified our thinking even further: we believed that the best approach to increase pedestrian safety was by using smarter sounds (sounds that communicate more and/or carry more meaning), while the best approach to reduce noise pollution was by using fewer sounds (a vehicle emitting sounds only when it really needs to).

After this exercise, our core concept became clear: We believe that an external audio system that is context-aware could be a better solution to increase pedestrian safety. This system would take into consideration the following aspects and data points to produce different types of sounds:

- The car’s actions (moving forward, turning right, parking)

- The car’s speed and projected path

- The type of pedestrians around it (strollers, canes, cyclists)

- The number of pedestrians around it

- The pedestrian’s proximity to the vehicle and their projected path

- Environmental conditions (rain, snow)

- Location (type of city, culture)

- Setting (schools vs. highways)
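
To make the list above more concrete, here is a minimal sketch of how these data points might be gathered into a single structure for an audio system to read from. It is an illustration only, not something we built for this project: every name in it (`VehicleContext`, `Pedestrian`, `CarAction`, `PedestrianType`, the `WALKER` category, the field names and units) is a hypothetical choice made for this example.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Tuple

# All names below are hypothetical; they only mirror the list of data points.
Point = Tuple[float, float]  # an (x, y) position in meters, assumed for illustration


class CarAction(Enum):
    MOVING_FORWARD = "moving forward"
    TURNING_RIGHT = "turning right"
    PARKING = "parking"


class PedestrianType(Enum):
    WALKER = "walker"      # assumed default category, not named in the article
    STROLLER = "stroller"
    CANE = "cane"
    CYCLIST = "cyclist"


@dataclass
class Pedestrian:
    kind: PedestrianType
    distance_m: float                                   # proximity to the vehicle
    projected_path: List[Point] = field(default_factory=list)


@dataclass
class VehicleContext:
    action: CarAction                                   # the car's action
    speed_kmh: float                                    # the car's speed
    projected_path: List[Point]                         # the car's projected path
    pedestrians: List[Pedestrian]                       # type, proximity and path of each
    weather: str                                        # environmental conditions, e.g. "rain"
    location: str                                       # type of city, culture
    setting: str                                        # e.g. "school" or "highway"

    @property
    def pedestrian_count(self) -> int:
        """The number of pedestrians around the car."""
        return len(self.pedestrians)

    def nearest_pedestrian(self) -> Optional[Pedestrian]:
        """The closest pedestrian, or None when nobody is around."""
        return min(self.pedestrians, key=lambda p: p.distance_m, default=None)


context = VehicleContext(
    action=CarAction.TURNING_RIGHT,
    speed_kmh=12.0,
    projected_path=[(0.0, 0.0), (5.0, 2.0)],
    pedestrians=[
        Pedestrian(PedestrianType.CYCLIST, distance_m=14.0),
        Pedestrian(PedestrianType.CANE, distance_m=6.5),
    ],
    weather="rain",
    location="dense city",
    setting="school",
)
print(context.pedestrian_count, context.nearest_pedestrian().kind.value)
```

Keeping everything in one snapshot like this would let a sound system make its decision from a single, consistent view of the scene, though how such data would actually be sourced from a car's sensors is outside the scope of this sketch.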

Arriving at this concept, although still broad, gave us an anchor for selecting an idea that would cater to the brief and could be tested. In the end, the team chose an idea that used sounds to communicate a pedestrian’s risk level, as it was the most tangible and impactful representation of this context-aware system concept.

Our Approach: Work Rooted In Concrete Design

After selecting the risk-based sounds idea to further explore and develop, we wanted to understand pedestrians’ expectations when it comes to their safety in the streets.

We started by mapping out and discussing our assumptions on how pedestrians perceive danger, their surroundings, and what information they need to receive from a vehicle to have a strong sense of their level of safety. We also included our ideas on what cars should communicate to people around them in order to support these needs.

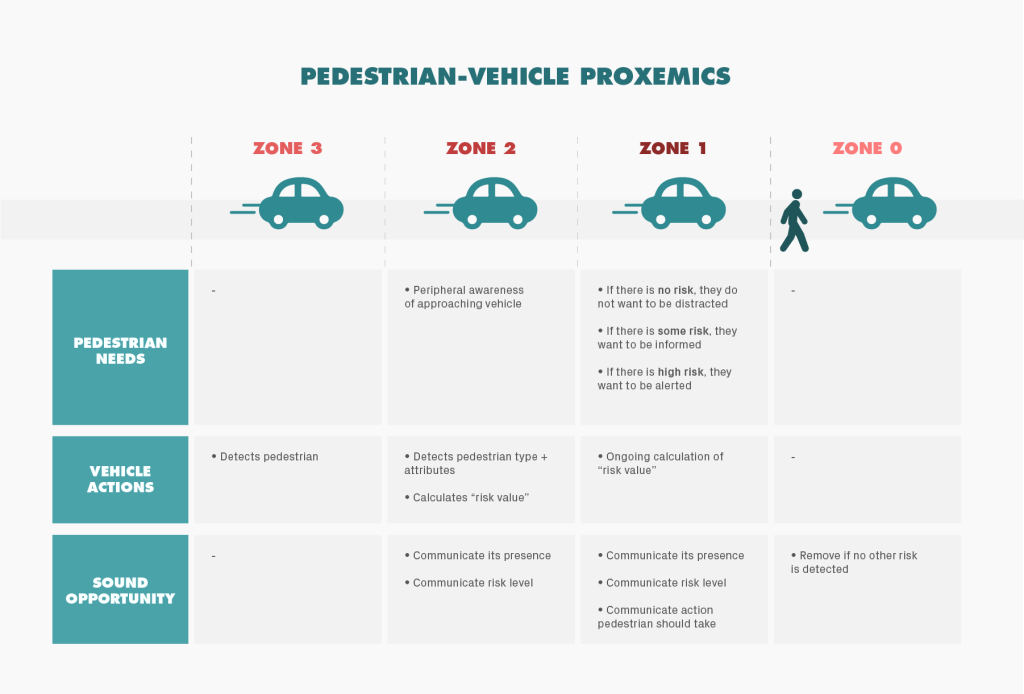

Our result from this exercise was a “risk zones diagram” that mapped out pedestrian needs, the vehicle’s supporting actions, and any potential sound opportunities.

This zoning, also known as proxemics, allowed us to understand pedestrian needs based on their proximity and awareness of an oncoming vehicle. The zones, from furthest to nearest, consist of:

- Zone 3: The vehicle detects the pedestrian in the distance, but knows that there’s no immediate danger.

- Zone 2: The pedestrian wants to know that the vehicle is approaching, and expects a vehicle to communicate its presence. The vehicle should start using all available data to understand its surroundings and the pedestrian in order to make a more informed decision when it needs to.

- Zone 1: The pedestrian wants to know if the vehicle poses any immediate danger and, if it does, expects the vehicle to communicate the level of risk their position/trajectory puts them in.

- Zone 0: The vehicle has passed and is no longer interacting with the pedestrian. All sounds stop.

In order for this system to work as intended, vehicles would have to rely on as much contextual information as they can gather from sensors and cameras. It’s important to note that one of the team’s goals was to design a potential solution that could be implemented in the near future, but could scale and evolve for needs beyond the next five years. Technology that is already available by way of systems like Tesla's Autopilot offers the perfect starting point to start exploring this concept.

Currently, the primary purpose of Tesla’s system is to allow the car to drive in autonomous mode. It can detect road lines, in-path and outside-path objects, road lights, and traffic flow to help the car obtain a clear understanding of its surroundings.

Embedded content: https://vimeo.com/192179726

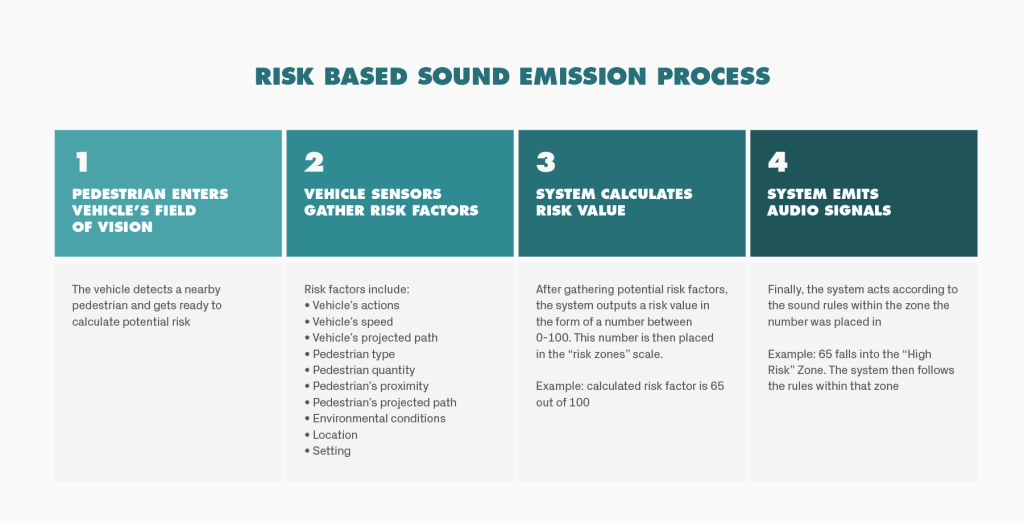

Relying on vision and sensory systems like these would allow a context-aware external sound system to gather the information it needs to calculate, create, and emit a sound that best communicates the level of risk a pedestrian is in. In order for this audio system to support these “risk zones”, we created an initial risk scale that the vehicle could use to determine the type of auditory signal to be emitted:

This “risk scale” would divide the zones by percentage ranges within a scale from 0% (no risk) to 100% (most risk). For example, the “low risk” zone would start at 0% and end at 25%, the “mid-risk” zone would start at 25% and end at 50%, and so on.
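
As a rough illustration of the scale described above, the following Python sketch maps a risk percentage to a named band. Only the first two ranges (0% to 25% and 25% to 50%) are stated in the text; the two upper bands, their labels ("high" and "collision"), and the choice to place a boundary value such as exactly 25% in the higher band are assumptions made for this example. The function name `risk_band` is likewise hypothetical.

```python
def risk_band(risk_percent: float) -> str:
    """Return the band of the 0-100% risk scale that a value falls in.

    Assumption: four equal bands of 25 points each, with boundary values
    (25, 50, 75) counted as part of the higher band.
    """
    if not 0 <= risk_percent <= 100:
        raise ValueError("risk_percent must be between 0 and 100")
    if risk_percent < 25:
        return "low"
    if risk_percent < 50:
        return "mid"
    if risk_percent < 75:
        return "high"        # assumed label for the third band
    return "collision"       # assumed label for the top band


for value in (0, 24.9, 25, 60, 100):
    print(value, risk_band(value))
```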

Now, why a percentage-based scale? Our initial thinking around how this process would work is the following:

Once we started adding more definition to our concept, we realized that prototyping and testing a context-aware system like the one we were envisioning would be a very complicated task. Even though the purpose of this project was an exploratory one, we knew that we still wanted to find a way to test the most simple representation of our idea.

We wanted to learn if pedestrians could gauge different levels of risks through vehicle-emitted sounds, so we started with the most simple interaction within this context: A car driving in front of a pedestrian while emitting different types of sound alerts. This scenario would serve as the basis of our tests and a way to learn first-hand about our ideas and assumptions.

Building An Experience

As product designers and developers, we are able to make well-educated guesses on how humans will feel and act when using our products. But we never know how people will react until the moment we put something in front of them. This is especially true with new and undefined HMI. Because of this, we highly value building prototypes to test our ideas and assumptions.

There were many elements that we wanted to discover, but the most important of all was the question, “Can pedestrians feel safer if vehicles communicate the levels of risk through sound?” We created four simple sound concepts, so that we could test them by building a prototype designed to gather data around this question.

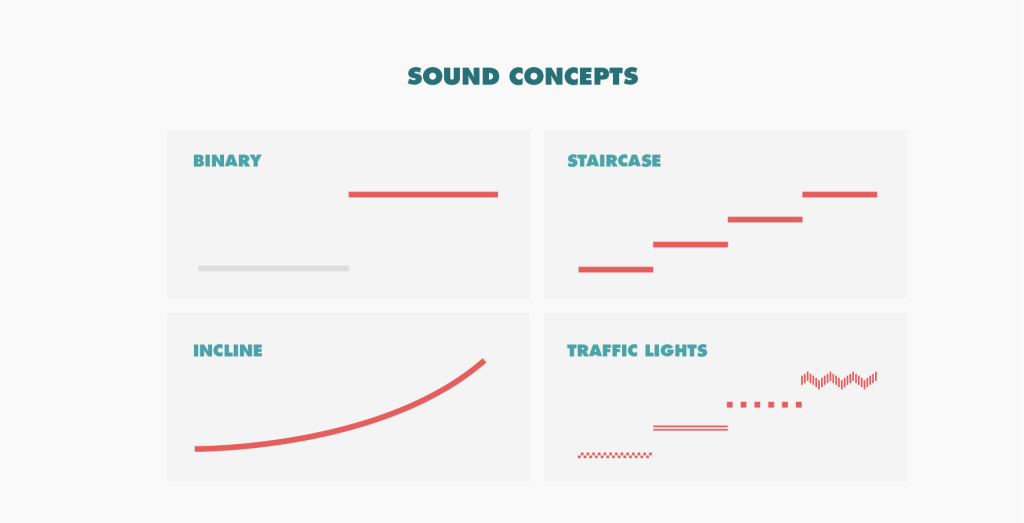

These concepts were:

- Binary Concept: Communicates risk in a binary manner. There is either no risk or high risk. In other words, this does not deliver different levels of risk. We purposefully added this simple concept to compare against more complex ones. Our goal was to learn if there was value in communicating different levels of risk, rather than just one.

- Staircase Concept: Communicates the level of risk in four distinctive levels (low, mid, high, and collision) by altering different characteristics of the same sound source.

- Incline Concept: Communicates levels of risk in an incremental manner. Change of the sound intensity is gradual. There is no point where the sound intensity changes dramatically, unless the risk value changes quickly. An example of this is the current reverse tone in the vehicle that communicates proximity to an object behind it.

- Traffic Lights Concept: Communicates levels of risk by using completely different sounds for each level of risk. A good visual representation of this would be traffic lights, which use different colors to communicate different things.
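
One way to compare the four concepts side by side is to treat each as a function from a risk value (0 to 100) to a sound and an intensity. The Python sketch below does this under several assumptions that are not part of our prototype: the function names, the 50% threshold for the binary concept, the four equal 25-point levels, the 0.0 to 1.0 intensity scale, and the placeholder sound names are all hypothetical.

```python
from typing import Tuple

Signal = Tuple[str, float]  # (sound name, intensity from 0.0 to 1.0); assumed representation


def level(risk: float) -> int:
    """Index 0-3 of the quarter of the 0-100 scale that `risk` falls in (assumed bands)."""
    return min(int(risk // 25), 3)


def binary_concept(risk: float, threshold: float = 50.0) -> Signal:
    """Either no risk or high risk; the threshold value is a hypothetical choice."""
    return ("alert", 1.0 if risk >= threshold else 0.0)


def staircase_concept(risk: float) -> Signal:
    """Same sound source, altered in four distinct steps."""
    return ("alert", (level(risk) + 1) / 4)


def incline_concept(risk: float) -> Signal:
    """Same sound source, with intensity changing gradually with risk."""
    return ("alert", risk / 100)


# Placeholder names standing in for four completely different sounds.
TRAFFIC_LIGHT_SOUNDS = ("sound_a", "sound_b", "sound_c", "sound_d")


def traffic_lights_concept(risk: float) -> Signal:
    """A completely different sound for each level of risk."""
    return (TRAFFIC_LIGHT_SOUNDS[level(risk)], 1.0)


for risk in (10, 40, 50, 90):
    print(
        risk,
        binary_concept(risk),
        staircase_concept(risk),
        incline_concept(risk),
        traffic_lights_concept(risk),
    )
```

Seen this way, the binary and incline concepts sit at opposite extremes (two states versus a continuous range), while the staircase and traffic lights concepts share the same four steps and differ only in whether the sound source itself changes.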

We investigated various ways to test our ideas. One idea was to simply install speakers on the body of a rented car. This would have been the closest to reality if our thinking were to be implemented, but it was time consuming, inflexible, and unsafe, the last of which was, ironically, exactly what we were trying to improve.

We also looked at virtual reality (VR) as a potential solution for this. Currently, businesses are finding significant value in using VR as a cost-effective prototyping tool. A few successful examples of this include architecture and manufacturing. Anders Oscarsson, ustwo’s Product Designer focused on VR, observes this trend:

“VR technology enables us to create simulations of the future. Before VR, these future visions could only be explored in the form of conceptual sketches or costly physical prototypes, but now we can actually experience them by using virtual technology.”

The automotive industry is no exception. OEMs are taking advantage of VR as a prototyping tool. For example, Volkswagen utilizes VR technology for product development throughout the group, including Bentley. For them, the use of VR technology is “... a response to the increasing number of models, versions and variants, and reduced development times that characterize today’s automotive product development process.”

In a similar spirit of rapid iteration, we believed virtual reality would be the most appropriate tool for us to utilize as well. Some of the reasons for this were:

- Safety - We were testing functionality that would be used when people were at risk. But realistically, we could not expose people to actual risk simply for testing purposes. By using VR simulations, we could recreate risky situations while avoiding participants actually being placed in harmful situations.

- Ease of iteration - Every product has room for improvement. This tool is no exception. We needed to be able to make changes to the way we test. With VR, this can be done via lines of code, rather than altering anything physically.

- Creating a controlled environment - In order to test something, it would be better to reduce the number of variables that could affect the test results. By using VR, we were able to control the environment because the experience was programmed.

- 3D audio technology in VR - There were great plugins that allowed users to hear high fidelity 3D audio in VR. They helped us recreate the perception of sound in the real world, thanks to teams like Two Big Ears (now part of Facebook) and DearVR.

- Accessibility - As long as we had the right gear, we could easily recreate the testing environment at any moment.

We now had the environment and the system, but well-considered sound design was necessary in order to have meaningful results. Man Made Music, who provided us with their perspective at the beginning of the project, shared our interest and passion for this experiment, in addition to the fundamental purpose of creating a safer environment through sound. We worked together to find the appropriate sounds to use for our concepts.

Their team approached this challenge by creating a series of sonic design sprints where they investigated taking various sounds--a bell, for example--and manipulating a fundamental of its tone (pitch, repetition, volume, etc.) to create a palette of four audio files with increasing intensity. These aligned with our four hypothesized zones of risk ("no risk", "low risk", "mid-risk", and "high risk").

For example, we would increase the number of repetitions of a sound while keeping the pitch the same (Bells in the playlist below). Another example included keeping the number of repetitions of a sound the same, but increasing the number of notes heard each time (Soft Alarm in the playlist below).

Embedded content: https://soundcloud.com/ustwo-fm/sets/ev-external-sound-design-exploration-sample

While working with Man Made Music, the teams also considered the behavioral concept that perception is based on the detection of change. In humans, the brain filters out any unchanging stimuli, so to signify increasing risk, a change in sound must be heard. They recommended we test a variety of sonic changes (pitch, tempo, volume, contrasting sound) and study which most effectively communicates a change in risk to a listener.

ustwo collaborated closely with a talented team at Man Made Music, including Dan Venne, Joel Beckerman and Kristen Lueck (not pictured). Photo by Mickey Alexander.

Putting Our Experiment To The Test

In order to test our new VR experience, we set up shop in one of our meeting rooms and ran the app on our HTC Vive. There were eight participants, all of whom were employees of the ustwo New York studio. (This of course is not an accurate representation of the population, but we believe there is value in receiving feedback from a casual experiment, and using it to help us make decisions moving forward.)

We first informed participants that for this test, we were primarily looking for feedback on our alerting sound concepts, and wanted their full attention on said 3D audio. As such, their vision in the experiment was to be impaired, and they wouldn’t be able to see; only hear. With this knowledge, our testers entered our VR experience.

After participants became familiar with their surroundings through the white noise, we prompted a virtual vehicle to drive by the participants while emitting alert sounds. We had the car drive by four times, emitting a different alert sound with every pass. Afterward, we asked our testers to describe the sound they heard, and to relate it specifically to risk communication.

Here are sounds that our testers heard during the experiment: (Headphones are highly recommended)

Embedded content: https://soundcloud.com/ustwo-fm/sets/ev-external-sound-tested-concepts-3d-audio

The results of these tests were incredibly insightful. While we do not have a concrete and clear indication of which concept was the best, we can safely confirm that many of our small, instinctive hypotheses were leading us in the right direction. Here are four key learnings from this experiment:

- Intensity alone does not signify risk - With a gradual build of intensity through volume, speed of notes, pitch, etc., the respondents felt there was a greater change in proximity or speed, not risk. While that's important to know, an increase in the intensity of the sound doesn't alone signify risk, which was the purpose of the test.

- Sudden sound change communicates risk - A sharp contrast between sounds seemingly signifies a change in the amount of risk more clearly than sounds with a gradual intensity build.

- Musical or melodic sound may become a distraction - When it came to musical tones, respondents claimed their sense of risk was lowered, due to the tones feeling instrumental and familiar, as if from a song.

- People perceive risk differently - Although participants heard the same sound, their perception of the risk varied slightly. This suggests that a degree of education may be needed.

While these key learnings are not surprising given our understanding of music and sound, the experiment gave us an opportunity to reinforce that knowledge through a fast and iterative process. Because of this, we considered our experiment a success.

Results, Insights, And A Look At What's Next

When we started this journey, we anticipated the solution to electric vehicles’ lack of sound might wind up being similar to the sounds emitted by parking sensors. However, after weeks of research and testing, what we found was that our dilemma was much more complex, and finding a solution wouldn’t be so simple.

While we’ve gained a lot of insights along the way, our team has come to the realization that we’ve only just begun to scratch the surface of this issue. More ideas, research opportunities, tests, and iterations are needed. As we look toward the future, and what could come next, there are a few stand-out examples of specific areas we’d love to delve into for our next research phase:

- Do pedestrians want four levels of risk? Maybe fewer risk levels are better. - In our test, we had four zones (low, mid, high, and extreme risk), and the vehicle made a different sound based on those zones. Fewer levels might allow pedestrians to recognize the sound/message, perceive danger, and take action more quickly.

- How do these concepts actually influence reaction time? - Ultimately, this system is successful if pedestrians can react quickly enough to avoid a collision or a high-risk situation. Reaction time is therefore important, and we need to start gathering data on it. Our VR experience has a feature called “Guide Mode”, which gives participants a small distraction and so creates a situation in which the vehicle passes by unexpectedly.

- How will the car handle more complex scenarios? - Our simple scenario consisted of one car and one pedestrian on a clear, sunny day. Would the sound be emitted differently in a thunderstorm? Or in the middle of a festival with a million people? Stress testing would be necessary in the future.

- What if we combined the Traffic Light concept with the Incline concept? - The Traffic Light concept seemed to get attention. There is an opportunity to communicate more in each level. What if the sound at each risk level also intensified to communicate risk in more detail?

- What if the external sound was verbal communication? - In some cases, pedestrians may need to take action in order to avoid a collision. Verbal communication could deliver commands more clearly than abstract sound. It could be especially useful in the high-risk zone.
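
For readers who want to experiment with the zone idea raised in the list above, here is a minimal sketch of how a vehicle might pick one of four risk zones (low, mid, high, extreme) and switch sounds only when the zone changes, in line with the finding that a sudden sound change communicates risk. Everything specific in it is an assumption made for illustration: the names `RiskZone`, `classify_risk`, and `zone_changes` are hypothetical, and so are the time thresholds and the choice of "time until the vehicle reaches the pedestrian" as the risk measure. None of these come from our VR app.

```python
from enum import Enum


class RiskZone(Enum):
    LOW = "low"
    MID = "mid"
    HIGH = "high"
    EXTREME = "extreme"


# Hypothetical thresholds: seconds until the vehicle reaches the pedestrian.
# These numbers are illustrative assumptions, not values from the experiment.
ZONE_THRESHOLDS = [
    (1.5, RiskZone.EXTREME),
    (3.0, RiskZone.HIGH),
    (6.0, RiskZone.MID),
]


def classify_risk(distance_m: float, speed_mps: float) -> RiskZone:
    """Map distance and closing speed to one of the four risk zones."""
    if speed_mps <= 0:
        # Vehicle is stationary or moving away from the pedestrian.
        return RiskZone.LOW
    time_to_reach = distance_m / speed_mps
    for limit, zone in ZONE_THRESHOLDS:
        if time_to_reach < limit:
            return zone
    return RiskZone.LOW


def zone_changes(samples):
    """Yield (index, zone) each time the zone differs from the previous sample.

    A sound system could use these transitions as the moments to switch
    to a sharply different sound, rather than ramping intensity gradually.
    """
    previous = None
    for index, (distance_m, speed_mps) in enumerate(samples):
        zone = classify_risk(distance_m, speed_mps)
        if zone is not previous:
            yield index, zone
            previous = zone
```

Testing fewer risk levels, as the first question above suggests, would only require removing entries from the hypothetical `ZONE_THRESHOLDS` list.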

Our partner, Man Made Music, also envisions areas for further exploration from a strategic sound design perspective. They see two immediate areas to investigate. The first would be to look at how a "low risk" sound could help a pedestrian determine where the vehicle is coming from, and they have a few starting points for that idea. The second would be to look at what type of "high risk" sounds communicate action. While we discovered respondents knew to be alarmed by the "high risk" sounds, they didn't know exactly what to do about it. We would want to explore how to communicate both "high risk" and action together.

We started researching the external sound of EVs because we were fascinated by the new challenges and opportunities. As we started to understand more, the topic became deeper and deeper. At the same time, we discovered even more opportunities than we anticipated. We see opportunities to make pedestrians safer and make cities quieter. This time, we explored a small idea to increase pedestrian safety through communicating risk, using all of the information that cars can get. This element of communication is becoming increasingly important as we enter an era in which robots, in other words autonomous vehicles, transport us from one place to another. We need to be able to communicate with them so that we all understand their intentions and next actions. For designers and developers today, this is our challenge.

Download The Experiment

As always with experiments we do at ustwo, our teams like to share and contribute to the communities we’re a part of, and our experience with creating sound concepts for electric vehicles is no exception. As long as you have an HTC Vive set up, you can use this VR app to quickly test some sounds being emitted from a moving vehicle. This should be a great tool for sound designers working on external vehicle sound.

Download for HTC VIVE - ustwo's External Vehicle Sound Experiment VR App

Embedded content: https://vimeo.com/211233094

Final Thoughts: A Postscript for OEMs

Historically, the engine sound of a vehicle has been part of the brand expression. All brands should strive to create an emotional and personal relationship with their customers. Engine sounds have, for the most part, been designed for the owner of the car. For some car owners, the sound of the engine has been the key factor in purchasing the vehicle. However, we are moving into an era of car sharing. This might mean that more and more cars will be perceived as devices for mobility rather than something that represents the personality of their owners. The question then is: who will OEMs be expressing the brand to through external sound? The answer might be the people who are outside of the cars. We think that they care about safety, functionality, and a pleasant soundscape without noise pollution. Perhaps being quiet is the loudest way to express your brand values. This might be the time for OEMs to rethink the purpose of external sound in general.

If you're ready to challenge the landscape of external vehicular sound, or simply want to explore the future of mobility, get in touch with us at evsound@ustwo.com.

Header illustration by Gwer