Seeing that machine learning (ML) is all the rage right now, I decided to jump on the hype train and see where it took me. I soon discovered that it wasn’t like learning a new JS framework or the next magical CI solution. Instead, it was an entirely new world of headaches and possibilities that would make me re-evaluate how I approach problems altogether.

As a developer, discovering how much abstract thought and statistics happen in ML was as close to a slap in the face as it gets. But, as someone who doesn’t know when to stop, I continued pursuing ML enlightenment. By pooling various resources from the likes of Reddit and Coursera, I managed to hack together a simple roadmap of the concepts I wanted to understand.

This roadmap was only the start but, as with school, I’ve never been too keen on learning the ‘standard’ way. So, true to form, I set out to build something with the intention of learning along the way.

So, What’s The Plan?

Technology has huge potential to help people, and I am particularly interested in how it can do so in the field of mental health – as ustwo have already started to explore. From the Moodnotes team, I have learned how hard it is to keep a clear head whilst leading such busy lives and juggling a thousand different responsibilities. This concern inspired me to create an ML model capable of analysing the mood of any particular song. With it, I hoped to be able to predict a person’s emotional state based on their recently played songs.

Imagine the possibilities if you knew, or could at least accurately guess, someone’s mood. If your user is feeling down, why not send an encouraging message? Why not connect people feeling the same way so they can support each other? Target ads based on mood… maybe not. Whilst there are definite moral considerations, the sky's the limit for this sort of technology.

With this in mind, I separated the project into four – in hindsight pretty ambitious – stages:

- First is creating a model capable of analysing a song’s lyrics and determining the likely emotions behind the text.

- Followed by another model capable of analysing the song’s melody with the goal of adding another level of complexity and accuracy.

- Then I would have to find a way to join the two models using the magic of mathematics and hopefully get a refined level of emotions attached to a song.

- Finally I would apply data science strategies to the data collected to build a 'global' context and improve accuracy.

In the words of James Governor, ‘Data matures like wine, applications like fish’, so if I wanted something truly helpful, I had to make sure there was data to feed the machine.

Getting Started

When I kicked off, the plan seemed simple enough – use sentiment analysis for the lyrics! What could go wrong?

Firstly, sentiment analysis tends to be quite binary. This is excellent when you’re trying to analyse if the tweets that your controversial startup receives daily are positive or negative. But what if you want more information? Like if your users are angry, sad or beaming with joy? What if they’re angry and sad at the same time? This is where it gets tricky.

Following the most widely accepted emotion theories, there are six primary emotions: anger, disgust, fear, happiness, sadness and surprise. With so many options, our needs are way beyond a simple binary analyser.

Now, the way I usually tackle building products is to get something out there as fast as possible using whatever tools are at my disposal, and then refine as needed. In this case, I thought about relying on the existing strengths of binary analysers by building a separate mood analyser per emotion.

Each analyser would read the song’s lyrics in the light of its own emotion and estimate how likely the lyrics are to express it. Then, I would have to find a way of mathematically unifying those results into one coherent answer. Something like: 80% anger, 10% happiness, 5% unknown. However, this approach wouldn’t necessarily guarantee actual emotional accuracy since it’s very ‘modularised’.
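To make the idea concrete, here is a minimal sketch of how one analyser per emotion could be combined into a single answer. It is not what I built, and certainly not how a real sentiment analyser works: the keyword lists, `make_binary_analyser`, `combine` and `analyse_mood` are all hypothetical names invented for illustration, and simple keyword counting stands in for a trained binary classifier. The unification step here is plain normalisation, which is only one of many possible choices.

```python
from typing import Callable, Dict, Set

# Hypothetical keyword lists standing in for trained per-emotion classifiers.
EMOTION_KEYWORDS: Dict[str, Set[str]] = {
    "anger": {"hate", "rage", "fight"},
    "sadness": {"tears", "cry", "alone"},
    "happiness": {"smile", "joy", "dance"},
}


def make_binary_analyser(keywords: Set[str]) -> Callable[[str], float]:
    """Build a toy analyser that scores lyrics for a single emotion."""

    def analyse(lyrics: str) -> float:
        words = [w.strip(".,!?") for w in lyrics.lower().split()]
        if not words:
            return 0.0
        hits = sum(1 for w in words if w in keywords)
        # Fraction of words that match this emotion's keyword list.
        return hits / len(words)

    return analyse


def combine(scores: Dict[str, float]) -> Dict[str, float]:
    """Normalise independent per-emotion scores so they sum to 1."""
    total = sum(scores.values())
    if total == 0:
        # No analyser found anything, so the mood is entirely unknown.
        return {"unknown": 1.0}
    return {emotion: score / total for emotion, score in scores.items()}


def analyse_mood(lyrics: str) -> Dict[str, float]:
    """Run every per-emotion analyser and merge the results."""
    analysers = {
        emotion: make_binary_analyser(keywords)
        for emotion, keywords in EMOTION_KEYWORDS.items()
    }
    scores = {emotion: analyser(lyrics) for emotion, analyser in analysers.items()}
    return combine(scores)


if __name__ == "__main__":
    print(analyse_mood("I cry alone in rage"))
```

Even in this toy version the weakness is visible: each analyser only ever sees its own emotion, so nothing in the combined answer reflects how the emotions interact.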

After going through a lot of papers, articles and videos, I realised that using binary analysers only promised to help me get something out there quickly – instead of actually creating something and learning. It became clear I was in way over my head for a learning project. I couldn’t approach such a scientific field so casually, as much as that hurt my developer’s pride. However, not all hope was lost. During my research I discovered IBM’s Watson, which inspired me to readjust my original, admittedly over-complex, four-stage plan.

You Know My Methods, Watson

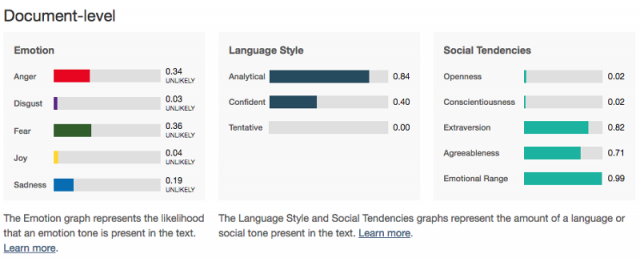

For those of you who don't know, Watson is a system made by IBM that uses machine learning to analyse unstructured data and answer questions posed in natural language – and is significantly better than anything I will be creating this year. Basically, one of its features is a remarkable sentiment analyser that does exactly what I was trying to do. So I did what any logical person would – Googled the saddest song of all time and inputted its lyrics.

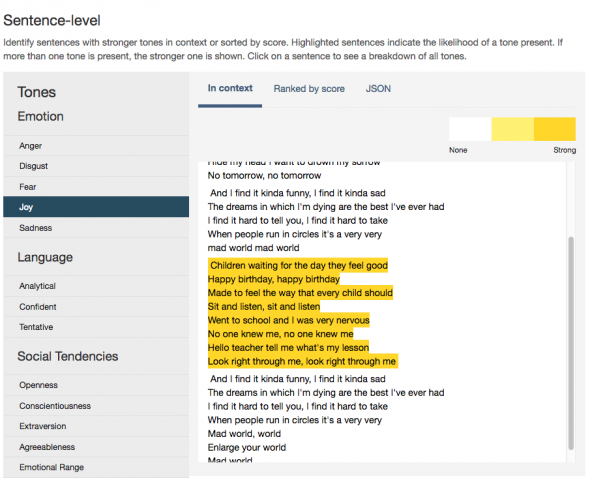

Gary Jules’ ‘Mad World’ left Watson indecisive. Maybe angry, a bit afraid, kind of sad. Things were not looking great until I started to analyse the lyrics in a less technical light and took a closer look at individual sections of them.

Here I began to notice something problematic with this approach – children singing happy birthday doesn’t say ‘saddest song in the world’ to me. This same pattern repeats with every emotion and it led me to a bothersome theory: text itself is emotionless and the emotion is conveyed by the reader. Subjectivity, the bane of machine learning.

But what about that time I cried when I read The Fault in our Stars?

What if you had never experienced suffering or a loved one’s death? Would you be able to relate to the story? If you read the book under a context that understands the loss of someone, then you can definitely be swayed into feeling sadness. But if you didn’t understand emotions, as if you were a machine, you would only be capable of reacting in the way you were told to.

Around the time I was tackling these problems, I was reading about one of my favourite directors and discovered something that gave clarity to these big questions. Hayao Miyazaki, the mastermind behind films such as Princess Mononoke and Spirited Away, believes stories should be told by interactions and not through dialogue. To him, dialogue is simply making explicit the emotions carefully imbued in the scene. To do this, he creates entire scenes before adding any dialogue. This means the viewer should know what’s happening just through the characters’ actions, scenery, the score and other nonverbal cues.

Knowing this, we can see that there’s usually a lot more context around a conversation than just the spoken words. This is why just trying to analyse text will never be 100% accurate. There is an entire world of context outside the few lines a song contains and not observing it will lead us nowhere.

Going Forward

Based on my experience up to this point, I had a feeling I might face the same challenge with determining a tune’s emotions. That’s why I’m going back to the drawing board when it comes to stages 2, 3 and 4 from my original plan. I will now approach them as emotional problems, not just technological ones.

As Mike Townsend said, 'Machine learning is like highschool sex. Everyone says they do it, nobody really does, and no one knows what it actually is'. After this little side project, this was the first thing that came to mind.

Realising the error in the way I tackled this problem has been enlightening. I was focusing entirely on the final product and saw everything at my disposal as a tool to be used. Machine learning should be approached with dedication and care – it’s not a tool but rather a whole new paradigm for solving a diverse set of problems. Maybe once this relatively new field matures, we can start using certain areas as tools in the form of easy-to-use APIs, like Watson or one of the hundred tweet analysers out there. But until then we will have to roll up our sleeves and get deep in the machine.

By sharing my efforts, I am hoping that those with expertise may be able to shed some light onto the problems I faced during my experiment. I’m a definite newbie in such a fascinating field and a firm believer that there’s wisdom in any nugget of knowledge or criticism.

This post is edited from a version published on Medium - you can follow him here. For more on machine learning and data science why not check out this blog post on data science, machine learning and…avocados and watch this to get some more insight into how Hayao Miyazaki inspired this post!