In this post we see the autonomous vehicle design principles from our latest book, Humanising Autonomy: Where Are We Going?, in action. Keep an eye out throughout the post for the design principles we applied during this thought experiment – and download the free ebook to see all 21 principles in full.

WHAT ROLES WILL WE MISS WHEN THERE IS NO LONGER A DRIVER?

Taxi services are symbols of our great cities, with their little quirks and behaviours. Some of these quirks are even embedded in the vehicles themselves – for example, a London black cab must be tall enough to accommodate someone wearing a bowler hat!

A certain sense of exclusivity also comes with the black cab, from years of regulation and the intensive training to gain “The Knowledge” required to become a cabbie. The iconic London black cab is also efficient, safe and comfortable, has informative drivers and is generally quicker than the average car (as it can use bus lanes). Black cabs also double as a tourist attraction in their own right.

Hailing a black cab is a ritual for anyone in London. It is a great leveller – everyone does it the same way, with a wave of the hand. Once the driver finds somewhere to stop next to you, you tell them where you need to go, hop in, and a verbal contract is made. Whoever you are, the black cab will get you there – quite an egalitarian service.

In essence, hailing a cab is a series of human interactions – a wave, shared glances and nods, hunched verbal negotiations – mediated by a vehicle. This set of interactions is either amplified, or in certain cases eradicated, by technology. With app-based taxi services like Uber and Kabbie, negotiations and choice happen from afar through digital interactions within the app. But the primary interaction of recognition when the vehicle approaches you is a ritual which is not lost. You still identify the vehicle through means such as the licence plate and a wave or a nod to get the driver’s attention.

Technological negotiations, on the other hand, presume a certain amount of prior knowledge and affluence – such as the ability to procure a smartphone, learn how to use it and have a functioning bank account to connect to certain services. You might also need to pre-emptively release some information about yourself, which may aid personalised services but at the expense of privacy. The technology might not be very inclusive, with certain segments of the populace automatically locked out – some older people don’t own smartphones and many do not believe it is safe to share bank details. Technological services can thus be seen as not very egalitarian.

With the advent of autonomous vehicles (AVs), there are bigger questions. With whom does a prospective passenger negotiate when there is no driver? How do they know the vehicle has seen them? Can they wave at a cab and have it slow down for them? Will the act of hailing a cab disappear completely?

We believe that the act of hailing or calling for a taxi (don't shout at London cabs by the way, it's not really allowed) is an egalitarian act which should not be lost amid the growing tendency to remove or lose human interactions in service design with the introduction of technological media.

So let's dig deeper into the set of interactions and the needs which make up the background of hailing a cab. Our aim is to design a set of behaviours that future AVs should follow, in order to make for a successful driverless experience – at Level 5 (full) autonomy.

Understanding the hail – Proxemics

Cabs can be hailed by anyone – a simple wave of the hand does the trick. Consider the different interactions that occur when you are negotiating with the driver; there are certain needs and potential problems which both actors need to consider. We can then extrapolate these interactions by considering AVs as social robots.

To provide some context, let's consider proxemics – the study of space and how people perceive and interact within their immediate surroundings and the actors within those surroundings.

“People have a definite sense of personal territory – intimate, personal, social and public. Different types of interactions take place in different zones. Eg you move in close to scribble your idea on the board, and then you step back to reflect on it… These rhythms are present in all our creative activities (proxemics).”

Wendy Ju – Rules of Proxemics / Design of Implicit Interactions, Stanford University

As Ju mentions, the different zones around a person and the nature of the zone’s perception define the interactions. Public, social, personal and intimate zones surround the person we’re focusing on – we’ll call them the hailer for short.

To examine how proxemics works, look at the above diagram which shows the various zones around a person hailing a present day cab with a driver. The cab, when being interacted with, moves from position 1 in the public zone to the personal zone in position 4, when the hailer perhaps gets into the vehicle. For argument’s sake, let’s assume that the hailer is not using a phone or smartphone during the interaction.

The intimate zone, just around the hailer, might or might not be encroached upon by the cab or the driver. It is a zone which might travel with the person as they get into the cab, and it’s the zone reserved for the most intimate interactions – with friends, family, or loved ones. When talking to the cab driver once inside the vehicle, this intimate zone might be breached in certain instances, but we will not consider it in great detail in our hailing exploration.
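To make the zones concrete, here is a minimal sketch of how an AV might label the hailer's zone from a measured distance. The outer radii are placeholder assumptions chosen only for illustration: this post does not give any distances, and real values for a vehicle approaching a person would need to come from testing.

```python
from enum import Enum


class Zone(Enum):
    INTIMATE = "intimate"
    PERSONAL = "personal"
    SOCIAL = "social"
    PUBLIC = "public"


# Hypothetical outer radii in metres, ordered from the hailer outwards.
# These numbers are illustrative assumptions, not values from the post.
ZONE_LIMITS_M = [
    (Zone.INTIMATE, 0.5),
    (Zone.PERSONAL, 3.0),
    (Zone.SOCIAL, 15.0),
]


def classify_zone(distance_m: float) -> Zone:
    """Return the proxemic zone for a cab at distance_m from the hailer."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    for zone, limit in ZONE_LIMITS_M:
        if distance_m <= limit:
            return zone
    # Anything beyond the last limit is treated as the public zone.
    return Zone.PUBLIC
```

With these placeholder radii, a cab 40 metres away would be in the public zone and a cab 2 metres away in the personal zone.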

Field Observation

In position 1, the cab and the hailer are in a position to interact, but they are not actively signalling to one another.

In position 2, in the public zone, the interaction between the two actors is subtle and implicit. The hailer sees the cab, sees the signal that it is free for a pick up (the yellow light is on) and proceeds to wave at the cab. The cab might or might not see the hailer. If seen, the cab driver moves closer to the hailer, across the road, either by changing direction or slowing down.

In position 3, in the social zone, the interaction might be more pronounced as the negotiation between the actors intensifies. The hailer might still be gesturing to the cab, and would be able to see the driver. A nod from the driver completes the human-to-human interaction and the cab moves towards the hailer, gently slowing down. The nod is critical as an element of recognition when there is more than one hailer present (perhaps outside a railway station). There is an “if” in this scenario: the cab may not be able to stop close to you, in which case the driver has to beckon you over or point to where you should approach them (quite typical in London, where there are curbside areas where vehicles cannot stop). This is a complex interaction, as the knowledge about permitted stopping areas lies with the driver and they have to convey this to the hailer in the most effective way possible.

In position 4, in the personal zone, the cab either parks close to the hailer or the hailer approaches the cab. In the personal zone, the driver can make an assessment about the hailer and ask where they want to go. This assessment is important for the driver to decide if they need to get out and lend a helping hand or activate the ramp to help the person to get in.

In return, the hailer speaks to the driver, lets them know where they need to go or perhaps asks for help. The hailer finds and opens the door and makes themselves comfortable, prompting the driver to proceed to the destination.

So we can see that the act of hailing a cab contains many interactions, which if done successfully, complete the narrative. Now let's consider the case of no driver – a robotic AV interacting with the hailer. Let's look into the possible problems, again considering the proxemic zones.

Problems and Opportunities

The robotic actor – the AV – has to take in multiple inputs and provide relevant feedback by interacting with the hailer throughout the different zones, steadily ramping up its signals and gestures from the public zone to the personal zone.

Beyond the public zone, the AV cab is a travelling observer, passively looking for obstacles, observing traffic rules, and looking out for hailers beckoning to the vehicle.

Questions which might pop up now are:

- What gesture and what negotiation would trigger the “hailing” set of interactions by the AV cab?

- When would the AV cab move from being a passive observer to an active participant?

- How does the hailer know that the AV cab is empty and is open to negotiation? Will it be as it is now, with a lit sign on the roof, or could it be a lot more apparent?

In the public zone and position 2, the AV cab has “seen” the trigger gesture by the hailer and has to respond accordingly. It also needs to compute the best position to move into to close in on the hailer. From the hailer’s perspective, there needs to be feedback about the vehicle’s intent and whether there has been recognition at that point. The AV cab also needs to provide feedback to other road users that it is taking an action.

Some of the questions here might be:

- What sort of feedback mechanisms, both implicit (eg a change in direction towards the hailer) and explicit (eg blinking of lights), should be employed by an AV cab to speak to the hailer?

- How does the AV cab negotiate with other traffic elements to move closer to the hailer?

In the social zone and position 3, the AV cab needs to move closer to the hailer and further negotiations need to take place. Here the hailer needs to see more feedback from the vehicle and the vehicle, in turn, needs to understand the context of the hailer – where the person is, in case the AV cab cannot safely stop close to them.

We can ask:

- What sort of feedback from the AV cab confirms that it has seen and is reacting to the hailer?

- What is the context of the pickup? What's the position of the hailer on the road and can the AV cab stop there? Is there more than one hailer, and if so, how does the AV cab signal who it is picking up?

- When are they hailing the vehicle? What are the traffic and road conditions for the AV cab to take into consideration?

- How does the AV cab provide feedback to the hailer if it cannot stop where they are?

In the personal zone and position 4, the AV cab needs to understand the person who is hailing, their needs, and react accordingly. There should be a provision for the hailer to ask questions of the machine or ask for help if needed.

The questions here are:

- Who is the person hailing? What are their mobility needs?

- How can the AV cab assist or welcome the hailer, without the presence of a driver? For example, by opening the doors.

- Why is the hailer getting the taxi and what sort of feedback must be provided to them?

- Where is the hailer going?

We will now proceed to figure out how to answer these questions. But before moving on, let’s see if we can leverage some of the advantages that technology can provide over a human cab driver.

Advantages of Technology

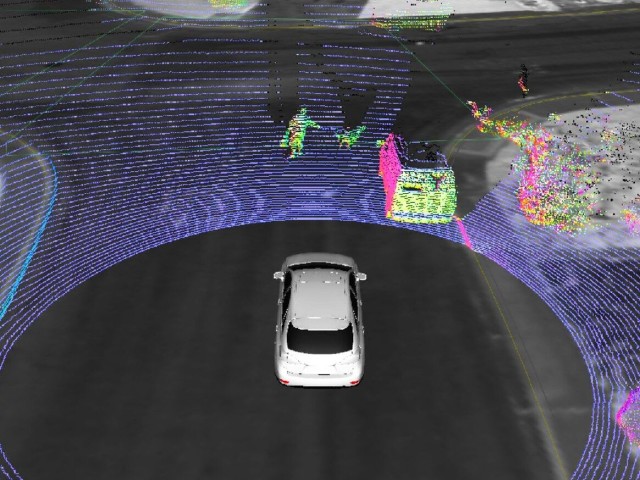

In the same way that human drivers have their advantages, robotic AVs might also have useful quirks in terms of sensorial recognition and personalisation that could be used in taxi services.

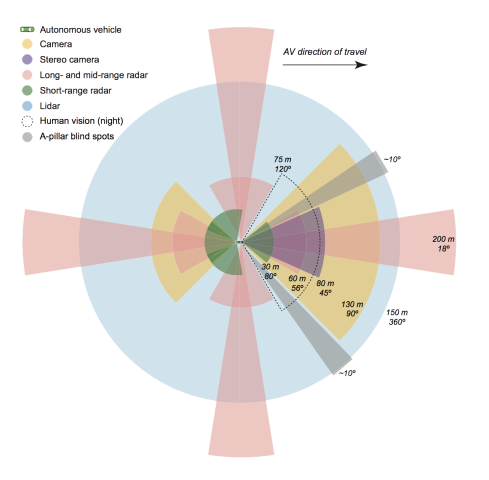

Our fictional AV cab will be a sensor-rich vessel, capable of making vast numbers of calculations and possibly having a higher memory capacity than human drivers. A fantastic bit of research from the University of Michigan called Sensor Fusion compares the reach of humans with that of AV sensors and is quite revealing.

The research also compares the strengths and weaknesses of humans and machines, highlighting the decreasing cognitive function of fatigued or overloaded humans, when formal recall becomes impaired and short-term memory is limited.

However, despite the clear advantages of sensors, there are key tests in which AVs still do not perform adequately, due to their inherent disadvantages – their inbuilt limitations, fidelity, and inability to compute inferences via multiple sensorial data in real-time. Thus some traffic scenarios still pose a challenge for automated vehicles.

“You’re probably safer in a self-driving car than with a 16-year-old, or a 90-year-old… But you’re probably significantly safer with an alert, experienced, middle-aged driver than in a self-driving car.”

Historically, human drivers are better at adapting to adverse situations, recognising faces, patterns, contexts and human emotion, but AVs are making great strides in that respect. An example put forward by MIT Technology Review was that of facial recognition algorithms and cameras which are getting so incredibly powerful that companies in China are building systems which perform better than human beings. This has prompted their use to authorise payments or catch trains (controversially, these systems also have the capabilities to detect IQ and sexual orientation). Companies like Face++ are providing “cognitive services” for companies to employ low-cost facial and body recognition systems within their products and environments. What fascinating and somewhat spooky times we live in.

So what does this mean for our AV cab, which might have the potential to exploit such technologies in the near future? From a service design perspective, personalisation through identification could be one such advantage, if designed well, without sacrificing privacy. For example, a person takes an AV cab on ten occasions, and on the eleventh time it is tailored to them, without identifying their name via any database.
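One way to picture "tailored on the eleventh ride without a name in any database" is a ride counter keyed by a one-way pseudonym. The sketch below is only an assumption about how such a service could be built: `rider_token` stands for whatever opaque signal the service uses to recognise a returning rider, and `SERVICE_KEY` and `TAILOR_AFTER_RIDES` are hypothetical names. Pseudonymisation on its own is not a full privacy guarantee.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret held by the taxi service; never stored next to rider data.
SERVICE_KEY = b"replace-with-a-secret-key"
# Assumption taken from the example in the text: tailor from the eleventh ride.
TAILOR_AFTER_RIDES = 10

# Ride counts keyed by pseudonym only; no names or raw tokens are kept.
ride_counts: Counter = Counter()


def pseudonym(rider_token: str) -> str:
    """Derive a stable pseudonym from the token using a keyed hash."""
    digest = hmac.new(SERVICE_KEY, rider_token.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def record_ride(rider_token: str) -> bool:
    """Record one ride and report whether this ride should be tailored."""
    key = pseudonym(rider_token)
    tailored = ride_counts[key] >= TAILOR_AFTER_RIDES
    ride_counts[key] += 1
    return tailored
```

Calling `record_ride` eleven times with the same token returns `False` for the first ten rides and `True` for the eleventh, while the store only ever holds the hashed key.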

Another advantage is repeatability of interactions for the identified person. For example, if the user is vision-impaired, the sliding doors could automatically open to welcome them – every time. This tireless consistency could be the key to building trust.

By considering these questions and opportunities we can draw out a set of concept interactions to be carried forward for testing – with actual people and actual contexts.

Early concept and testing procedure

Going back to each of the zones, we can draw some hypotheses about how the AV cab should interact with the hailer, both on the first time they use the service and during further interactions.

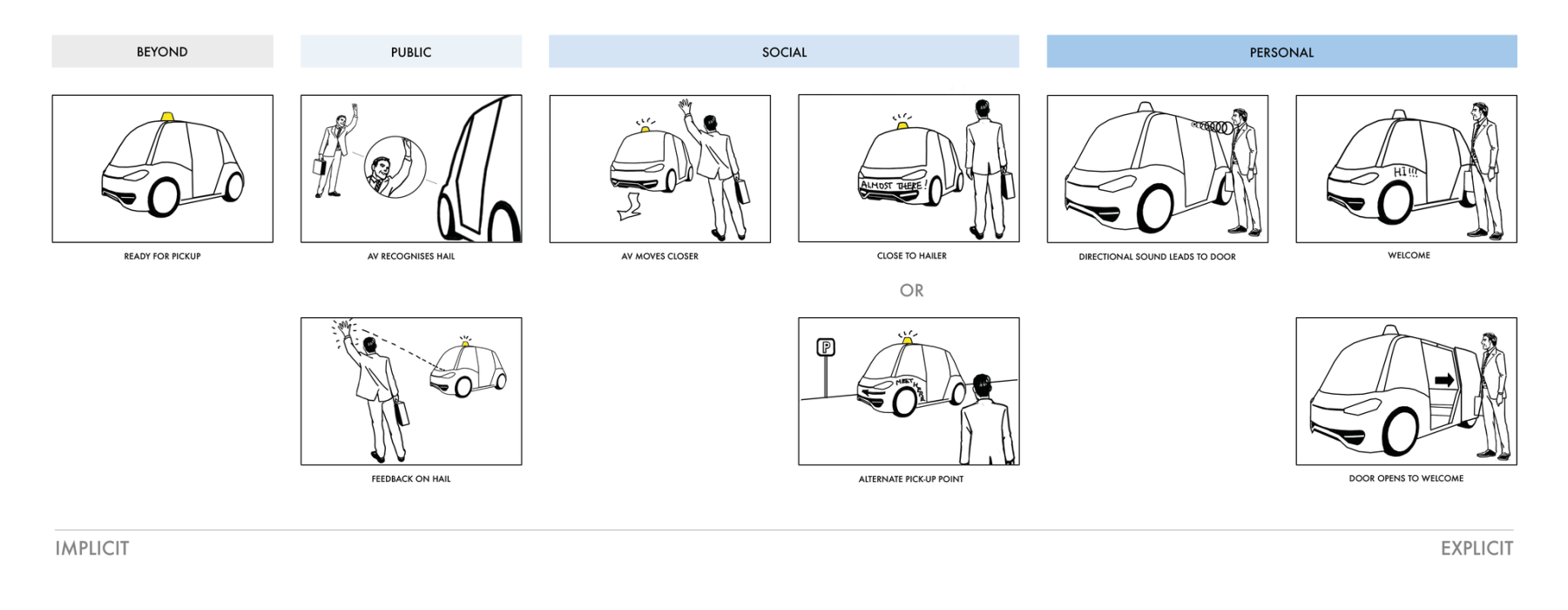

Firstly let's look at the interactions and how they might change across the various zones. This gives us a mental model for playing with various media and communication techniques.

Implicit, generic and impersonal interactions in the public zone could enable communication from afar. For example, a simple change in direction or the AV cab slowing down on observing the hail gesture is an implicit interaction. You wouldn't want the cab to shout out your name from a distance, would you? It wouldn't be very discreet.

Explicit, precise and personal interactions in the personal zone enable closer communication. For example, welcoming the hailer with a few words or opening the doors for them is an explicit interaction. A personal gesture from close range.

Thinking about some of the questions and opportunities we talked about earlier, we can also make an interesting observation: there is a gradual shift of initiative in each zone, from the hailer calling to the AV cab, to the AV cab taking charge and welcoming the hailer as it gets closer – a human-to-robot handover of initiative.

It is also important to note that in order to make this as inclusive as possible, we should consider a range of interaction channels, from audio to visual.

Let’s move on to detailed hypotheses for each zone. We have drawn out a storyboard of interactions from the first interaction with the AV cab up to entering the vehicle. Storyboarding scenarios helps us establish a preliminary narrative of how the actors talk to each other on a stage. Any hypothesis we might start off with is a “best case scenario” where all the interactions work perfectly. But these are subject to change and iteration during testing.

Public zone interactions

The AV cab displays visually that it is empty and ready for a pickup from afar, similar to present-day cabs. The visual medium is similar to that of a lit sign atop the vehicle.

The hailer gestures to an empty AV cab and is seen by it. The AV cab acknowledges the interaction via a visual mechanism (perhaps the sign itself flashes), and then proceeds to move towards the hailer just as a human driver would (an implicit interaction).

_During this thought experiment, we have applied seven of the 21 design principles we outline in our book. We'll share them as they come up through the post in this format:_

DESIGN PRINCIPLES APPLIED:

08. ACT HUMAN, BE ROBOT

Utilise both human and machine advantages by instilling the beneficial nuance of human behaviour while exploiting technological benefits, eg the quick response times of machines.

16. THE AV SHOULD TELL US IT UNDERSTANDS ITS SURROUNDINGS

For people to trust the AV, it is important that they know what it sees and that it understands where it is. An AV can also communicate an approaching hazard to vehicles behind it.

Social zone interactions

Further visual indications by the AV cab are required to confirm the acknowledgement of the gesture and then the AV cab moves into its correct position on the road (a mixture of explicit and implicit interactions).

The vehicle analyses the road and the hailer’s position to figure out if it can get close. If it cannot, it needs to signal its intent to move to a place where it can pick up the hailer, perhaps via a screen or some other visual medium.

The hailer acknowledges the movement of the AV and notices where the AV moves to.

DESIGN PRINCIPLES APPLIED:

17. THE AV SHOULD TELL US WHAT IT’S GOING TO DO

While driving we communicate our intent by using indicators while turning or slowing down to let people cross. This should be the same for the AV so that people understand it.

18. THE AV SHOULD RESPOND WHEN INTERACTED WITH

Feedback mechanisms are needed for people to know that the AV has seen or heard them, eg when being hailed, an AV should acknowledge the human interaction and respond.

Personal zone interactions

As the hailer approaches the AV cab, it guides them to the door using directional sound which follows the hailer.

The AV cab analyses the hailer’s situation – physical ability, number of bags, etc – and adjusts itself accordingly, by opening the boot (trunk), deploying ramps etc. The doors do not open automatically on first use, giving the hailer complete control over how they use the AV – a trust-building technique for autonomy.

Or, if it is the second time the hailer is using the service, the AV cab knows what is required of it – for instance, the best seating position to suit the person – and opens the door automatically.

The cab then opens the door to welcome the hailer into the vehicle, automatically if necessary.

The cab speaks to the hailer to ask for the destination.
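The personal zone steps above can be sketched as a small decision function. Everything here is a hypothetical illustration: `HailerContext`, its fields and the action names are invented for this sketch, and the rule that a returning hailer gets an automatic door while a first-time hailer keeps control is our reading of the storyboard, not a tested design.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class HailerContext:
    """What the AV cab has (hypothetically) worked out about the hailer."""
    previous_rides: int
    needs_ramp: bool = False
    bag_count: int = 0


def welcome_actions(ctx: HailerContext) -> list[str]:
    """Return the ordered welcome actions for the personal zone."""
    actions = ["guide_to_door_with_directional_sound"]
    if ctx.bag_count > 0:
        actions.append("open_boot")
    if ctx.needs_ramp:
        actions.append("deploy_ramp")
    if ctx.previous_rides > 0:
        # Returning hailer: the cab can take more initiative.
        actions.append("open_door_automatically")
    else:
        # First use: leave the door to the hailer to build trust.
        actions.append("wait_for_hailer_to_open_door")
    actions.append("ask_for_destination")
    return actions
```

A first-time hailer with two bags would get the boot opened but would open the door themselves, while a returning hailer would find the door opening for them.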

DESIGN PRINCIPLES APPLIED:

03. EMOTIONAL AND FUNCTIONAL NEEDS

There’s more to a journey than simply the functional need to get from A to B. Any journey includes many human and emotional needs such as comfort and human interaction.

06. ESTABLISH AND MAINTAIN A HUMAN ROBOT RELATIONSHIP

If a stranger is rude to you, you won’t want to interact with them again. The same applies to a robot. The AV must acknowledge and reciprocate human manners and behaviours. For example, when a person waves to thank an AV for letting them cross the road, the AV must display acknowledgement of the gesture back to the human.

21. THE AV SHOULD BE EMPATHETIC AND INCLUSIVE

People should feel independent and empowered around an AV irrespective of whether they have mobility issues or not. So affordances should be designed to enable people to feel respected and treated with discretion, eg the AV’s floor base lowering itself to allow any person to enter without aid.

This storyboard now forms the basis of our experiments and test procedure. Most of these interactions start from the assumption we made early on – that the hailer will not be using a smartphone or related device. The presence of such devices would definitely remove some constraints, but constraints can be beautiful and the application of constraints here will make the system we devise more egalitarian.
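For anyone turning the storyboard into a test script, it can help to write the best-case narrative down as a small state machine. The state and event names below are our own hypothetical labels for the storyboard steps, and the sketch deliberately ignores how the events would be detected.

```python
from enum import Enum, auto


class CabState(Enum):
    CRUISING = auto()      # empty, showing it is free for a pickup
    ACKNOWLEDGED = auto()  # hail seen and visually acknowledged
    APPROACHING = auto()   # moving towards the hailer
    REDIRECTING = auto()   # signalling a different pickup spot
    WELCOMING = auto()     # stopped, guiding the hailer in
    BOARDED = auto()       # hailer inside, destination known


# Hypothetical events mapped onto the storyboard steps.
TRANSITIONS = {
    (CabState.CRUISING, "hail_seen"): CabState.ACKNOWLEDGED,
    (CabState.ACKNOWLEDGED, "path_clear"): CabState.APPROACHING,
    (CabState.APPROACHING, "cannot_stop_here"): CabState.REDIRECTING,
    (CabState.REDIRECTING, "hailer_follows"): CabState.APPROACHING,
    (CabState.APPROACHING, "stopped_near_hailer"): CabState.WELCOMING,
    (CabState.WELCOMING, "destination_given"): CabState.BOARDED,
}


def step(state: CabState, event: str) -> CabState:
    """Advance one step; events that do not apply leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(events, start: CabState = CabState.CRUISING) -> CabState:
    """Replay a sequence of events and return the final state."""
    state = start
    for event in events:
        state = step(state, event)
    return state
```

Each test variation can then be expressed as a different event sequence, for example one that includes `cannot_stop_here` to exercise the case where the cab has to signal another pickup spot.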

Experimentation and iteration

Since this is a thought experiment, we’d like to talk very briefly about the possible experiments and research ideas we can draw out from the storyboard. The essence of the experiment lies in changing the variables of the interaction and the nature of the experiment itself – whether it’s real or virtual – while devising the tests.

Before we progress, we should note that we are only going to consider the user experience of the interactions and not worry too much about technological complexity. This is not a cop-out – we are doing this because we cannot predict the rate of technical progress. For instance, gesture recognition as it stands right now is in its infancy. People moving around, environmental conditions, the background colour occluding the colour of clothing or skin tone – there are numerous factors which need to be taken into consideration. The human eye and brain are able to do this with high fidelity, but machines are only just getting there. To employ gesture recognition in our scenario, the AV cab would have to recognise the hailing gesture from one of many that people might employ to hail a taxi, not to mention separate the gesture from all the noise in the background. So we will not let technological complexity completely distract us.

A. Variable – Feedback Mechanisms and Channels

The channels the AV cab could use to communicate with the hailer might vary. Visual signals over longer ranges could be light signals or textual data. For instance, blinking headlights could acknowledge the hailer from afar, and words on the AV’s external screens could do so once the vehicle is in the social zone. Similarly, the AV can shift its reliance to audio channels as it moves into the personal zone, much as a human driver would when interacting with a passenger (though not completely, in case the hailer is hearing-impaired). Advances in directional and projected sound might make it possible to communicate with a targeted person within a group of people.
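As a starting point for varying this in experiments, the channel choice can be written as a lookup by zone with an accessibility override. The channel names and the zone-to-channel mapping are assumptions made for illustration, loosely following the examples in the paragraph above.

```python
from __future__ import annotations

# Hypothetical default channels per zone, most prominent first.
CHANNELS_BY_ZONE = {
    "public": ["headlight_blink"],
    "social": ["external_screen_text", "headlight_blink"],
    "personal": ["directional_audio", "external_screen_text"],
}


def feedback_channels(zone: str, hearing_impaired: bool = False) -> list[str]:
    """Pick feedback channels for a zone, dropping audio if it cannot be heard."""
    channels = list(CHANNELS_BY_ZONE[zone])
    if hearing_impaired:
        channels = [c for c in channels if c != "directional_audio"]
    return channels
```

Keeping a visual channel alongside audio in the personal zone means a hearing-impaired hailer still receives feedback when the audio channel is dropped.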

B. Variable – Implicit and Explicit Interactions

We have spoken about the range of interaction types, from implicit in the public zone to explicit in the personal zone. Varying the degree of implicitness as the AV cab approaches the hailer would be a big part of experimentation. For instance, we could start with the language of physics – movement of the cab, via changes in direction and acceleration or deceleration – and end with explicit interactions like opening the doors to welcome the hailer.

C. Variable – People Who Would Be The Hailers

The recruitment of test subjects who would act as hailers would be critical in getting a range of inputs. Interaction strategies would vary based on qualitative testing with a spectrum of people – from the young to the old, from the sighted to the vision-impaired, to people with varying mobility difficulties. Qualitative experiments will provide early cues to the directions which are right for the scenario we construct. For instance, working with people with limited mobility, be they weighed down with bags or moving on crutches, will help us identify the best methods to welcome a hailer into the cab and help them settle in.

D. Variable – Research Conditions and Style

Qualitative research conditions could vary from creating very believable environments to using virtual reality techniques for testing with actual people.

Believable field environments could be very much like the Ghost Driver set of experiments conducted by Wendy Ju’s team at Stanford, which featured a hidden driver to simulate an autonomous vehicle in experiments with pedestrians and cyclists.

Their tests were conducted using the “Wizard of Oz” framework, a user experience (UX) and dramatics technique which immerses the testers in the environment and makes them believe in the actuality of the interactions they are having with a make-believe AV. (Ghost Driver is an extremely worthwhile read into people-centered research techniques.)

We could make virtual environments to create similar settings for AV and pedestrian interactions – which is one of the methods laid out in the prototyping and testing chapter of the book. While a lot more intrusive in terms of testing, they do provide for a lot more control of test conditions and environments for the researcher. With the help of virtual testing, we’ll swiftly be able to move the scenario beyond a thought experiment.

You can read more about the virtual prototyping and testing for AVs in the book. Download the book for free here and simply click “How To Test The Untestable” in the contents.

The ideas discussed in this post, and in the book at large, are intended to be a conversation starter – not the final word. Please get in touch with any thoughts and feedback – mobility@ustwo.com

You can also add to and amend the design principles directly on GitHub!